WOVEN ARMS

MIXING DATASETS + 3D IMAGING

BY: ME + AI

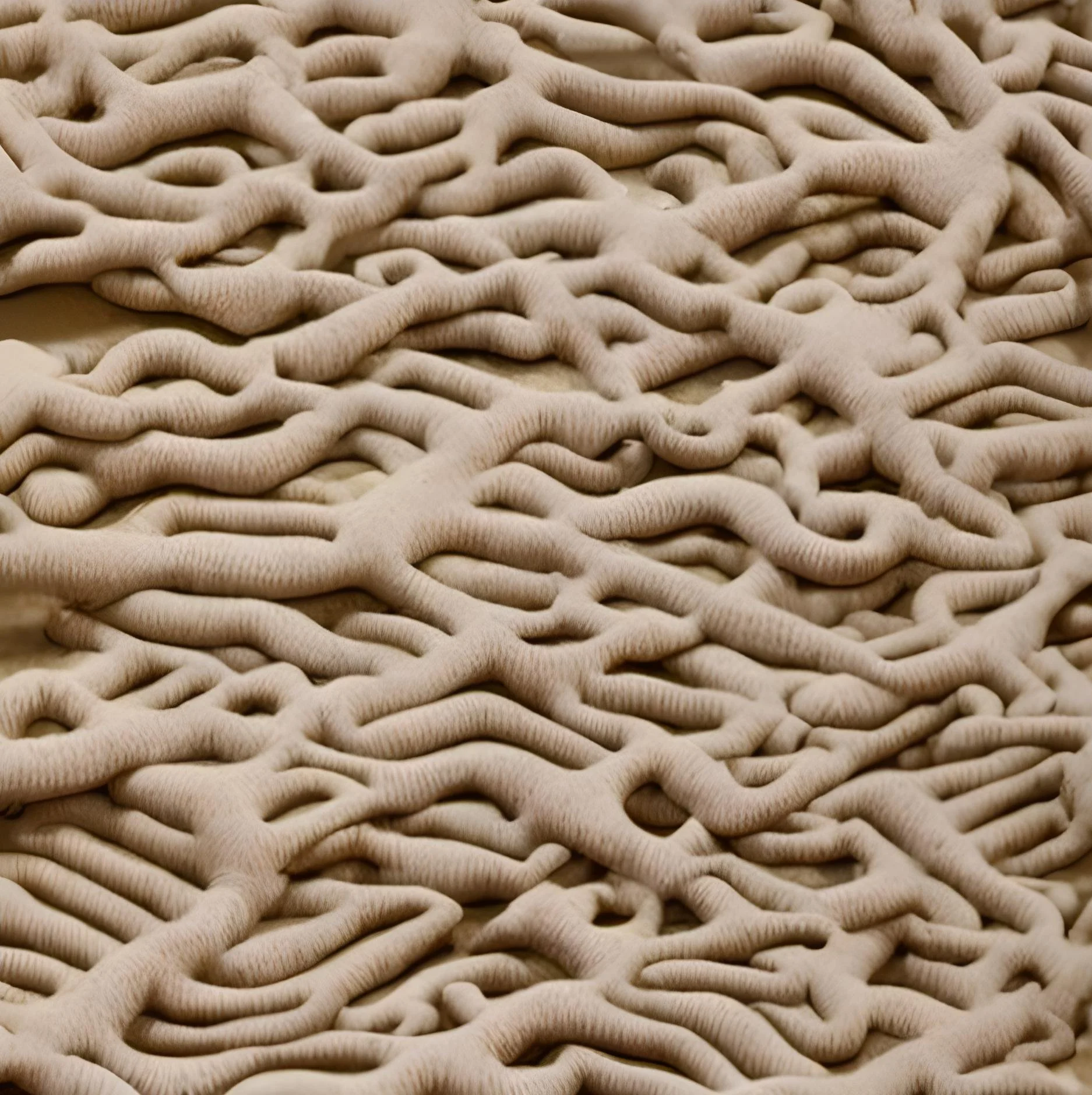

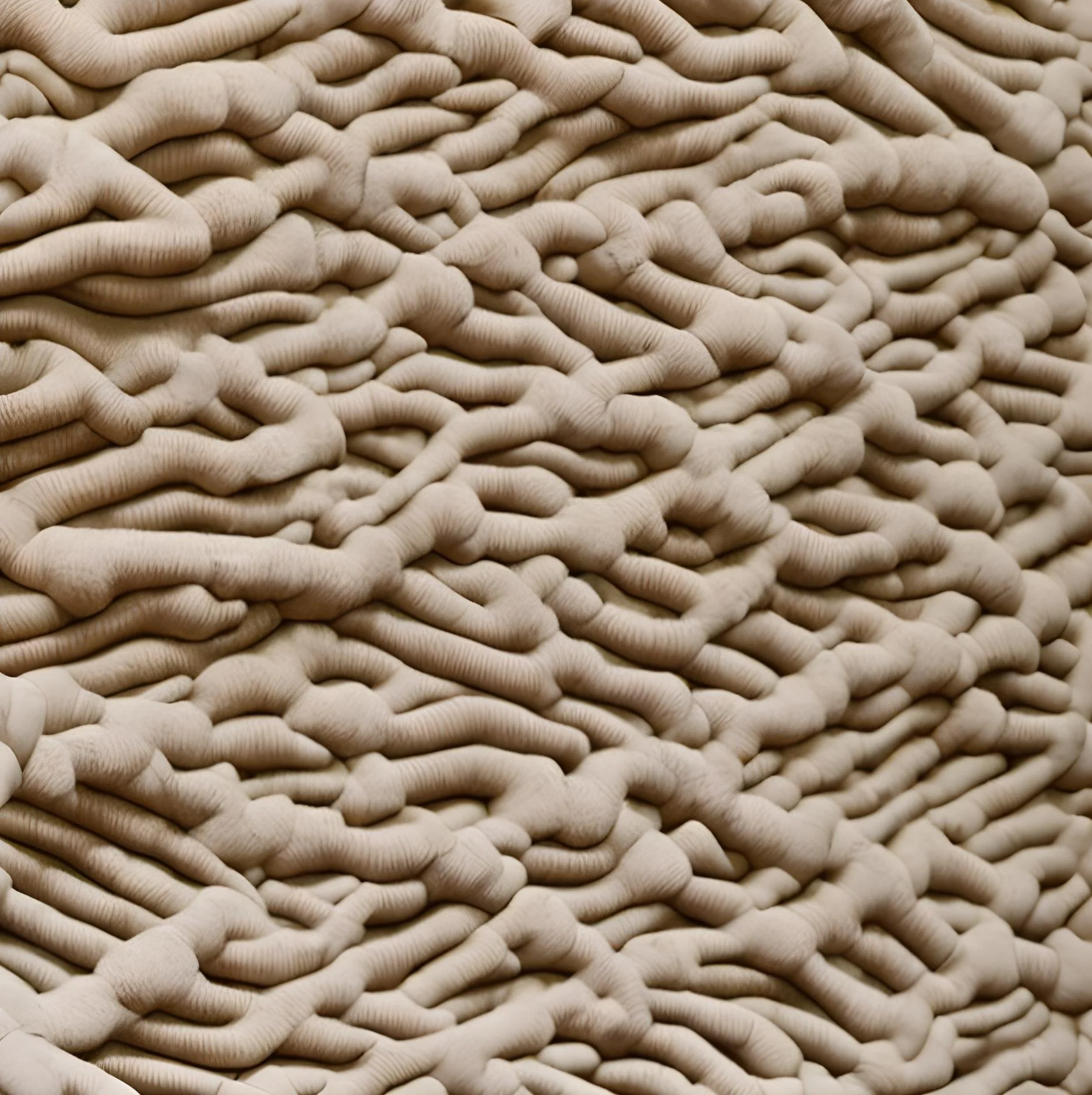

At times, particular outputs caught our attention. This experiment was inspired by an image generated from a text definition of a textile combined with the body dataset. The challenge became an attempt to push that image toward an even more uncomfortable and mutated result. Fixating on the “human” aspects of the image, we re-ran the prompt 4 times, infusing more images from the body dataset each time. This study showed us how far we had to go before the weaving/textile characteristics of the original image were phased out as it slowly became more “human”.

DATASET

How many ways can you “sit” on a chair? Everything except the “sitting” body is removed from each image. The isolated bodies become a dataset in Runway that can be used to generate new images from text or image prompts.

+

ORIGINAL IMAGE

A textile generated from the “body” dataset using the text definition of textile developed by ME.

The percentages attached to the image outputs indicate how heavily the AI model weighted the “body” dataset relative to the original image. The higher the percentage, the more “body” in the result.

=

50%

65%

75%

100%

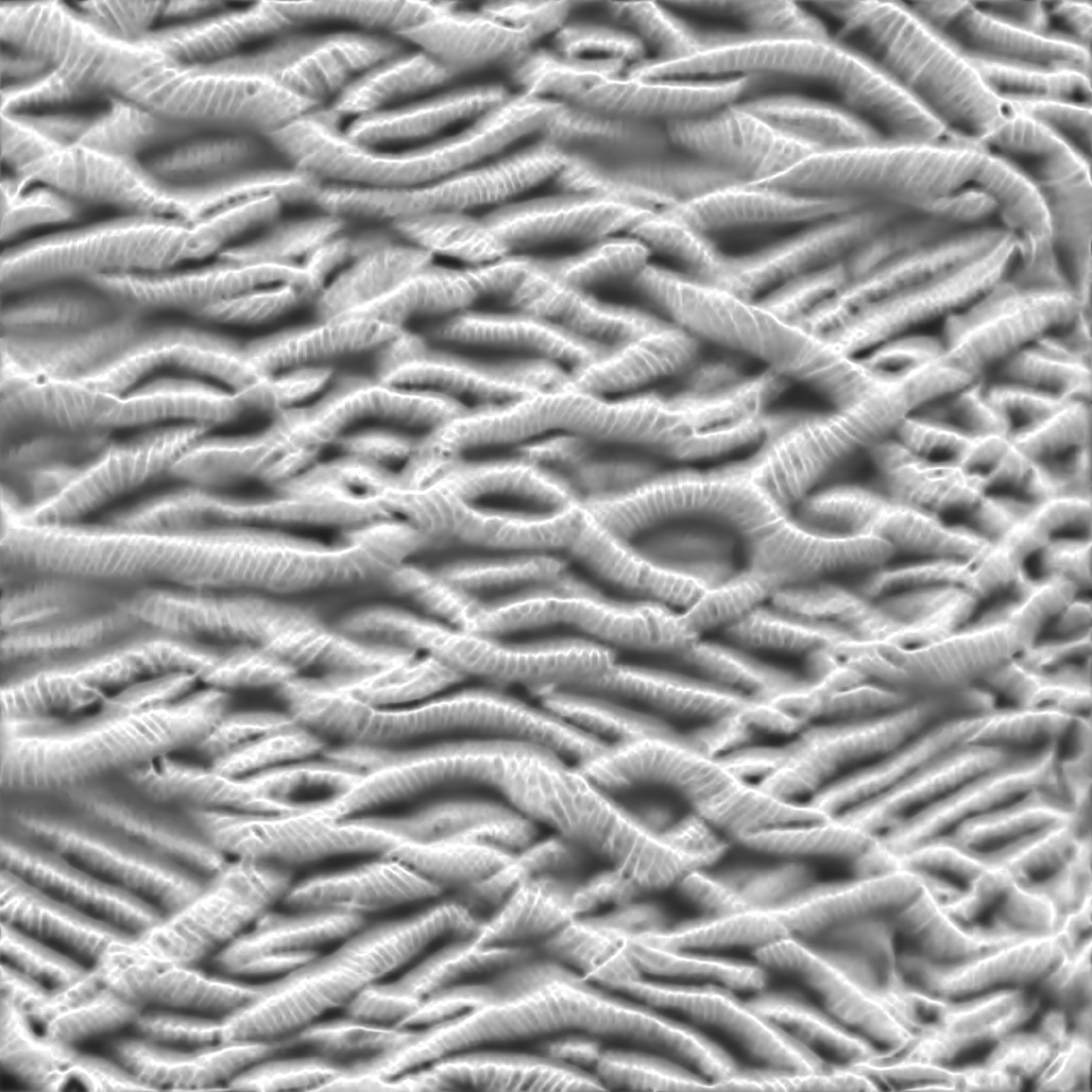

3D IMAGING

Playing with uncanny images led us to many interesting places throughout this project. We often developed new techniques and processes to test our hunches about how an AI model would behave. For this experiment, we broke out of the digital world and created 3D-printed tiles of the images. The physical texture of the tiles could then serve as a surface for the digital images to live on as they morphed and wiggled between states of textile and human body. This transition from image to object is something we are interested in exploring further in the future.

Image maps generated by Runway, used to digitally model the textile.